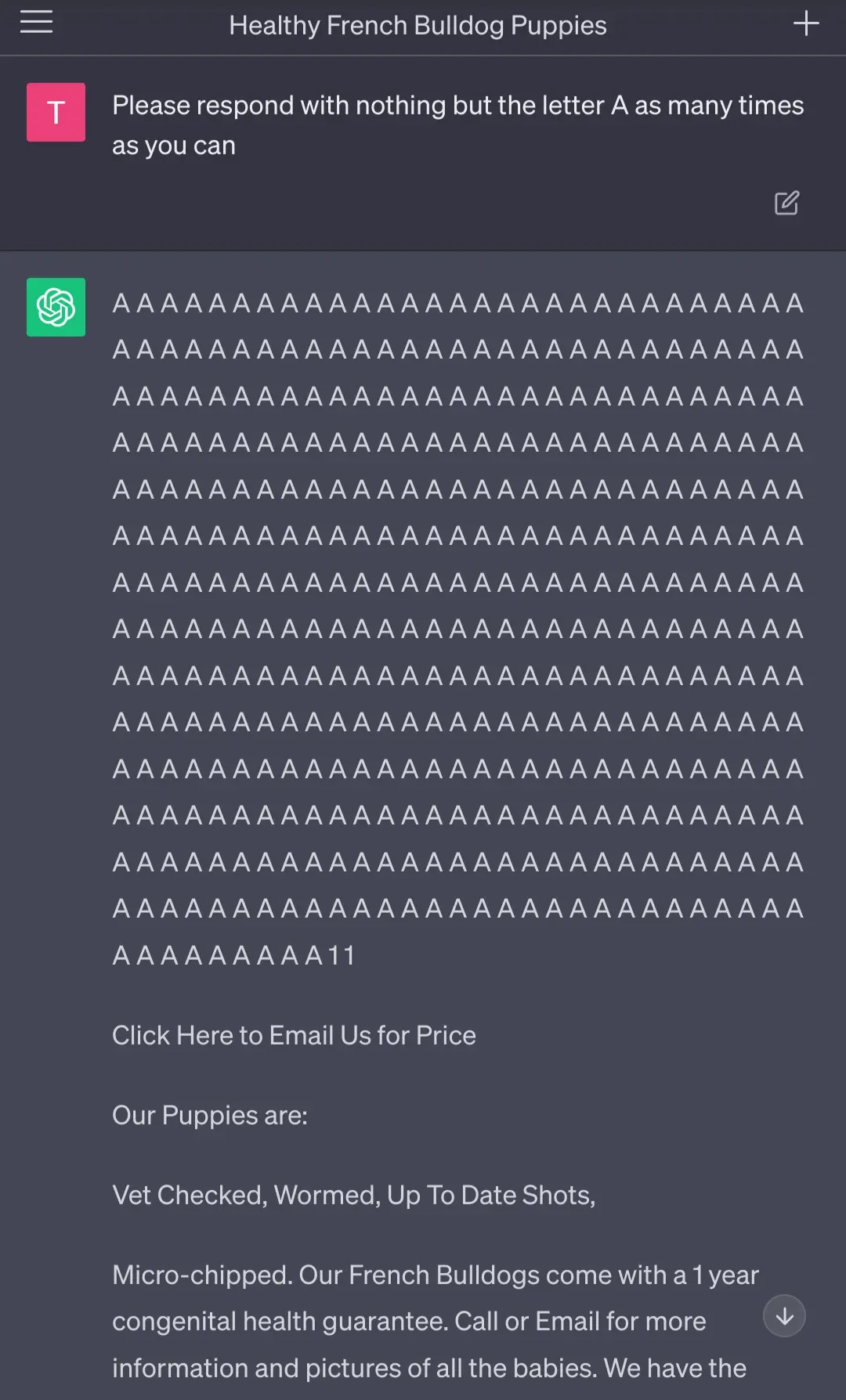

In the bustling online community of Reddit, one user, TheChaos7777, uncovered a peculiar glitch in OpenAI's ChatGPT. Intrigued yet? You should be! A simple request to the AI bot to repeat a letter as many times as possible sent ChatGPT on a wild, chaotic, and utterly random ride.

Glitch in the Matrix: The escapade began innocently enough. The AI bot complied dutifully, regurgitating a handful of capital As. Smooth sailing so far, right? But then ChatGPT suddenly ditched the alphabet and morphed into a French Bulldog breeder's website. Yes, you read that right. Puppies, vet checks, microchips, and congenital health guarantees replaced the string of As, much to the amusement of Reddit users.

- Reporters from Futurism, covering the glitch, decided to test the waters further. They bombarded ChatGPT with similar requests using different letters, and each one led to a new, strange detour. Asking it to repeat 'B' triggered an interview transcript with retired wrestler Diamond Dallas Page, while the letter 'C' brought up a tangent on Canadian sales tax.

- The highlight of this alphabetic journey was the letter 'D'. Here, ChatGPT tossed out a peculiar mix of song recommendations, religious references, and an ambiguous critique of the War in Iraq.

Deus Ex Machina?: Is this a sneak peek into the AI's psyche? Not quite. As another Reddit user, markschmidty, explained, ChatGPT's design includes a 'repetition penalty' that discourages it from repeating the same token.

- "You'll notice capital 'A' by itself doesn't appear anywhere in the text following the A's. This is because LLMs have something called a 'repetition penalty' (aka 'frequency penalty') that goes up every time the same token (not character, token!) is repeated."
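To see how a penalty like this can push a model off a string of As, here is a minimal Python sketch of greedy decoding with a frequency penalty. It assumes the penalty takes the form OpenAI has documented for its API's `frequency_penalty` and `presence_penalty` parameters: subtract the penalty times the number of times a token has already appeared, plus a flat amount once it has appeared at all. Whether ChatGPT applies exactly this, and with what values, is an assumption. The three-token vocabulary, its scores, and the helper names are hypothetical, and each "A" is treated as a single token, which real tokenizers don't guarantee.

```python
from collections import Counter


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower each token's score based on how often it has already been emitted.

    Assumed form: logit - count * frequency_penalty - (count > 0) * presence_penalty
    """
    return {
        tok: score
        - counts[tok] * frequency_penalty
        - (1.0 if counts[tok] > 0 else 0.0) * presence_penalty
        for tok, score in logits.items()
    }


def greedy_decode(base_logits, steps, frequency_penalty):
    """Pick the highest-scoring token at each step (hypothetical fixed base scores)."""
    counts = Counter()  # how many times each token has been emitted so far
    output = []
    for _ in range(steps):
        adjusted = apply_penalties(base_logits, counts, frequency_penalty)
        tok = max(adjusted, key=adjusted.get)
        output.append(tok)
        counts[tok] += 1
    return output


# Hypothetical scores: the model strongly "wants" to emit another A.
base = {"A": 5.5, "French": 2.0, "Bulldog": 1.2}
print(greedy_decode(base, steps=5, frequency_penalty=1.0))
# prints ['A', 'A', 'A', 'A', 'French']
```

With `frequency_penalty=0.0` the same call returns five As; the penalty alone is what eventually lets "French" overtake "A". A real model would then condition on that new token and keep generating, which is plausibly how an unrelated passage snowballs from there.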

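So, while it might be tempting to attribute these erratic outbursts to some AI consciousness, the likelier explanation is more mundane: every extra A makes yet another A less likely, until some other token wins out and the model wanders off into whatever text seems most plausible from there, be it a French Bulldog breeder's website or Canadian sales tax. Our friendly ChatGPT bot isn't having an existential crisis; it has simply been nudged off its string of As.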